Terraform Remote States in S3 with GitHub Actions

Written by Donna Kmetz


**Written by Malcon, Software Developer.**

HashiCorp Terraform is one of the most popular tools for infrastructure automation. It lets you configure, provision, and manage infrastructure as code (IaC). With Terraform, you can plan and create infrastructure across multiple providers using the same workflow.

Today we are going to explore one way to share and lock one of Terraform's core components, the [state file](https://www.terraform.io/language/state). We will store it in an AWS S3 bucket and use GitHub Actions as our GitOps tool.

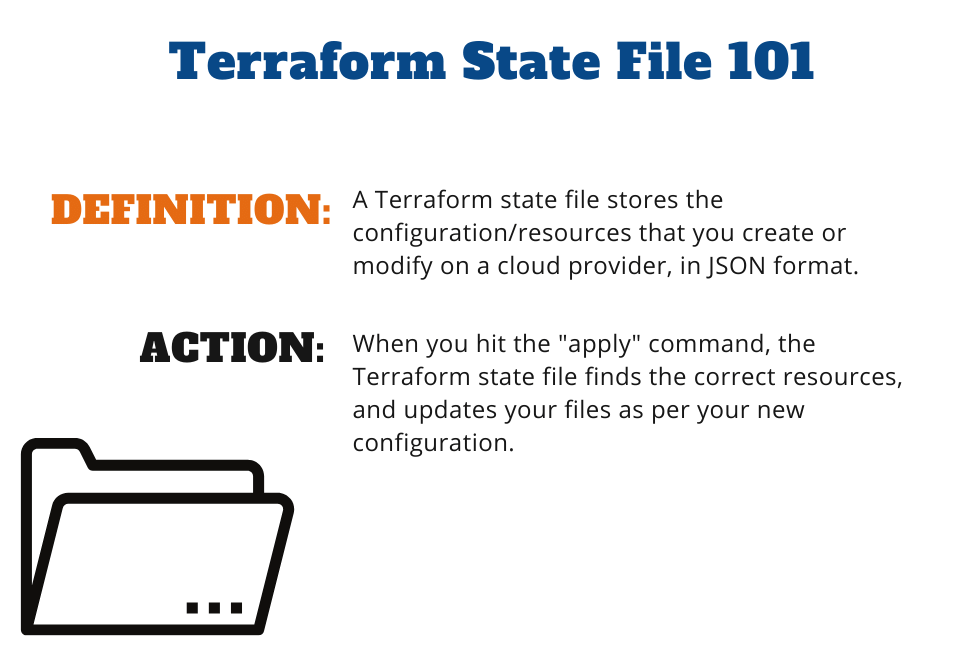

## What is Terraform state?

Terraform records the resources it manages, and how they map to your configuration, in a state file. If you’re using Terraform for a personal project, storing state in a local `terraform.tfstate` file works just fine. But if you want to use Terraform as a team on a real product, you run into several problems:

**Shared storage for state files:** To be able to use Terraform to update your infrastructure, each of your team members needs access to the same Terraform state files. That means you need to store those files in a shared location.

**Locking state files:** As soon as data is shared, you run into a new problem: locking. Without locking, if two team members are running Terraform at the same time, you may run into race conditions as multiple Terraform processes make concurrent updates to the state files, leading to conflicts, data loss, and state file corruption.

In the following sections, we’ll dive into each of these problems and show you how to solve them.

## This is (not) the way

The most common technique for allowing multiple team members to access a common set of files is to put them in version control (e.g. Git). With Terraform state, this is a Bad Idea for the following reasons:

**Manual error:** It’s too easy to forget to pull down the latest changes from version control before running Terraform, or to push your latest changes to version control after running Terraform. It’s just a matter of time before someone on your team runs Terraform with out-of-date state files and, as a result, accidentally rolls back or duplicates previous deployments.

**Locking:** Most version control systems do not provide any form of locking that would prevent two team members from running `terraform apply` on the same state file at the same time.

**Secrets:** All data in Terraform state files is stored in plain text. This is a problem because certain Terraform resources need to store sensitive data. For example, if you use the `aws_db_instance` resource to create a database, Terraform will store the username and password for the database in a state file in plain text. Storing plain-text secrets anywhere, including version control, is a bad idea.
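Here is a minimal sketch that makes the secrets problem concrete. The resource name, engine, and sizes are illustrative assumptions, not taken from the tutorial repo. Marking the variable `sensitive` only redacts it from plan and apply output. The password is still written to the state file in plain text, which is why the state itself needs protecting.

```hcl
variable "db_password" {
  description = "Master password for the example database"
  type        = string
  sensitive   = true # redacts the value in CLI output only, NOT in state
}

resource "aws_db_instance" "example" {
  identifier          = "example-db" # hypothetical name
  engine              = "postgres"   # assumption: any engine behaves the same way
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "app_admin"
  password            = var.db_password # ends up in terraform.tfstate as plain text
  skip_final_snapshot = true
}
```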

Instead of using version control, **the best way to manage shared storage for state files is to use Terraform’s built-in support for remote backends**. A Terraform backend determines how Terraform loads and stores state. The default is the local backend, which you are using if you have never configured one; it stores the state file on your local disk. Remote backends allow you to store the state file in a remote, shared store. A number of remote backends are supported, including Amazon S3, Azure Storage, Google Cloud Storage, and HashiCorp’s Terraform Cloud and Terraform Enterprise.
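For reference, a minimal S3 backend block looks roughly like this. The bucket name, key, and region are placeholder assumptions you would replace. Backend blocks cannot reference variables, so these values must be literals or be supplied at init time with `terraform init -backend-config=...`.

```hcl
terraform {
  backend "s3" {
    bucket = "my-terraform-state-bucket"   # hypothetical; the bucket must already exist
    key    = "global/s3/terraform.tfstate" # object key where the state file is stored
    region = "us-east-1"                   # assumption: the bucket's region
  }
}
```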

## Remote backends with GitOps

If you’re using Terraform with AWS, Amazon S3 (Simple Storage Service), which is Amazon’s managed file store, is typically your best bet as a remote backend for the following reasons:

- It’s a managed service, so you don’t have to deploy and manage extra infrastructure to use it.

- It’s designed for 99.999999999% durability and 99.99% availability, which means you don’t have to worry too much about data loss or outages.

- It supports encryption, which reduces worries about storing sensitive data in state files.

- It supports locking via DynamoDB. More on this below.

- It supports versioning, so every revision of your state file is stored, and you can roll back to an older version if something goes wrong.

- It’s inexpensive, with most Terraform usage easily fitting into the free tier.
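Several of the points above are opt-in settings on the bucket: versioning, encryption, and keeping it private. Below is a sketch of a hardened state bucket. It assumes AWS provider v4 or later, where these settings are separate resources, and uses a hypothetical bucket name.

```hcl
resource "aws_s3_bucket" "terraform_state" {
  bucket = "my-terraform-state-bucket" # hypothetical; S3 bucket names are globally unique

  # Guard against an accidental `terraform destroy` of the bucket holding all state.
  lifecycle {
    prevent_destroy = true
  }
}

# Keep every revision of the state file so you can roll back.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id
  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state objects at rest by default.
resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id
  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# State can contain secrets, so block every form of public access.
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket                  = aws_s3_bucket.terraform_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```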

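Multiple writes to the same state file at the same time can leave it somewhere we definitely don't want, right? To prevent this, we are going to use DynamoDB as a lock. Terraform acquires a lock entry in a DynamoDB table before it writes the state and releases it when it is done. A second concurrent run therefore fails to acquire the lock instead of overwriting the first.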
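The S3 backend expects the lock table to have a string partition key named exactly `LockID`. Below is a sketch of such a table; the table name is a hypothetical placeholder. You would then point the backend block at it through its `dynamodb_table` argument.

```hcl
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks" # hypothetical table name
  billing_mode = "PAY_PER_REQUEST" # lock traffic is tiny, so no capacity planning
  hash_key     = "LockID"          # the S3 backend requires this exact key name

  attribute {
    name = "LockID"
    type = "S" # string
  }
}
```

Newer Terraform releases also support S3-native locking through the backend's `use_lockfile` argument and have deprecated the DynamoDB-based arguments. Check the backend documentation for the version you run.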

Last but not least, we are going to run CI/CD for these resources with GitHub Actions. The pipeline lints the Terraform code and deploys it into our AWS account, with Git as the source of truth.

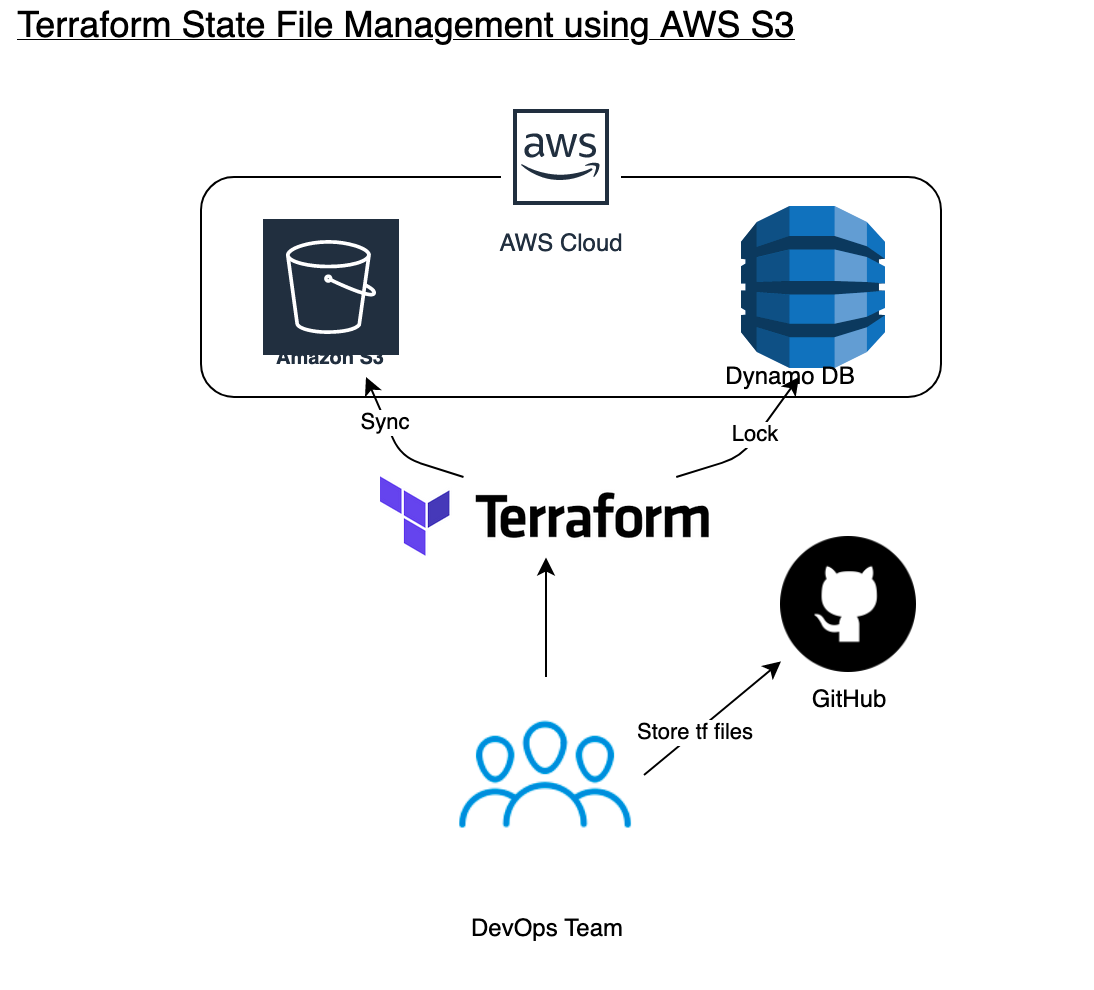

The solution will look like this:

## Getting our hands dirty!

## Setup Steps

Prerequisites:

* An AWS account with an access key ID and secret access key

* Git installed on your machine

* A GitHub account

* A GitHub repository

### Step 1: Create the backend bucket

1. Clone the repo: `git clone git@github.com:malconip/terraform-tfstate.git`

2. Install the [Terraform](https://www.terraform.io/downloads.html) binary

3. Set your AWS credentials as environment variables in your shell:

* `export AWS_ACCESS_KEY_ID=[your-key]`

* `export AWS_SECRET_ACCESS_KEY=[your-key]`

4. Run `terraform init` to initialise Terraform

5. Update the `main.tf` file and set the `bucket` property in both the backend block and the S3 resource block (yes, even the backend block that's commented out; we'll need it in step 8)

6. Run `terraform apply` (type `yes` when prompted) to create the bucket
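Steps 5 to 8 exist because of an ordering problem: the backend bucket cannot hold state before it exists. Below is a minimal sketch of how `main.tf` might be laid out during step 1. The backend block is commented out, so `terraform init` falls back to the local backend. This is an illustrative layout, not the repo's actual file. The names, region, and provider version constraint are assumptions.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # assumption: any recent AWS provider should work
    }
  }

  # Commented out for the first init/apply: the bucket does not exist yet,
  # so state starts out on the local backend (terraform.tfstate on disk).
  # backend "s3" {
  #   bucket = "my-terraform-state-bucket" # must match the bucket below
  #   key    = "terraform.tfstate"
  #   region = "us-east-1"
  # }
}

provider "aws" {
  # Credentials are read from AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY.
  region = "us-east-1" # assumption: pick your own region
}

resource "aws_s3_bucket" "terraform_state" {
  bucket = "my-terraform-state-bucket" # hypothetical; S3 bucket names are global
}
```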

### Step 2: Run Terraform on GitHub Actions

7. Uncomment the backend configuration in `main.tf`

8. Run `terraform init` again (type `yes` when asked to copy your existing local state to the new S3 backend)

9. Add `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` as repository secrets at github.com/[your-username]/[your-repo]/settings/secrets/new

10. Run `git add .` and `git commit -m "First commit"` to commit your changes

11. Run `git push` to push to GitHub
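For step 7, the uncommented backend block might look like the sketch below. The bucket name must match the one set in step 5. The `terraform-locks` table and the other values are hypothetical placeholders. When `terraform init` detects the backend change, it offers to copy the existing local state into S3. In a non-interactive shell, `terraform init -migrate-state -force-copy` performs the same migration without the prompt.

```hcl
terraform {
  backend "s3" {
    bucket         = "my-terraform-state-bucket" # hypothetical; same name as in step 5
    key            = "terraform.tfstate"         # object key of the state file
    region         = "us-east-1"                 # assumption: the bucket's region
    encrypt        = true                        # server-side encryption for the state object
    dynamodb_table = "terraform-locks"           # hypothetical lock table with a LockID string key
  }
}
```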

## Conclusion

Now we can work as a team and move the business forward. But beware, padawan: this is only the first step, and we still have many other questions to answer:

- How to share state between multiple accounts?

- How to protect the state file?

- How to use those states as data sources?

Perhaps another blog post about this?

--


Donna Kmetz is a business writer with a background in Healthcare, Education, and Linguistics. Her work has included SEO optimization for diverse industries, specialty course creation, and RFP/grant development. Donna is currently the Staff Writer at Jobsity, where she creates compelling content to educate readers and drive the company brand.